watonomous.github.io

Software Division: Design Draft for Traffic Lights

Created by Frank Wu on Jul 06, 2020

Henry's Design Draft

This design document proposes the architecture and data flow for a service that interfaces with Perception and Path Planning to make sense of traffic light detections. Although Perception is able to detect many traffic lights in front of the vehicle, it's not obvious which traffic light's signals should be obeyed; that depends on the vehicle's current lane. The proposed service determines which detected traffic light's signal applies to the vehicle. Put more succinctly, the service associates each detected traffic light with a lane.

This document assumes that the reader has some familiarity with the HERE Lane Geometry Topology Layer and ROS services, and some exposure to the HERE Traffic Light JSON file.

Open question: do we merge the traffic lights into the OSM file, or keep them separate?

Service Overview

This program will be implemented as a ROS service instead of the typical ROS publisher node that continuously publishes data whether or not anyone needs it. Once again, we apply the principle of least privilege; loosely interpreted, a client only gets what it needs to know. The only query supported will be:

TrafficLight getTrafficLight(here_lane_group_id, here_lane_index)

where here_lane_group_id and here_lane_index are exactly what they sound like: concepts from the Lane Geometry Layer. Together, these 2 identifiers uniquely identify the entry lane that the vehicle is on. The reply is a representation of the traffic light signal that Path Planning can act on. In plain English, the query reads: given the unique identifier of the entry lane I'm on, tell me the current traffic light signal.

Inputs

This service consumes 2 major sources of data: traffic light detections from Perception and traffic light mappings from HERE HD Maps.

Assume that Perception produces bounding box detections of traffic lights in an image, and that their world-frame positions can be estimated precisely (but not accurately) using the camera intrinsics and extrinsics. Recall the distinction: precise estimates are consistent with each other, while accurate estimates are close to the true value. If the focal length and sensor size of the camera are known, along with the real dimensions of the object, similar triangles (the pinhole camera model) can be used to estimate the object's distance from the camera. This information is to be transmitted with the TrafficLight ROS Message. We can assume that detections within an image are ordered from left to right; it doesn't matter where the sorting is done. In reality, we might have to apply a noise filter to the positions reported by Perception if they turn out to lack precision after all, which means the service depends on object tracking. It's also likely that some detections will be missed and that some false positives will appear. Oh well.
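
To make the similar-triangles estimate concrete, here is a minimal Python sketch of the pinhole camera model. The function and parameter names are hypothetical, and it assumes the real-world height of the traffic light housing is known and that lens distortion is negligible; it is an illustration, not Perception's actual implementation.

```python
def estimate_distance_m(focal_length_mm: float,
                        sensor_height_mm: float,
                        image_height_px: int,
                        object_height_m: float,
                        object_height_px: float) -> float:
    """Hypothetical helper: distance to an object via the pinhole camera model.

    By similar triangles, object_height_m / distance_m equals the object's
    height on the sensor divided by the focal length.
    """
    if object_height_px <= 0:
        raise ValueError("object_height_px must be positive")
    # Convert the bounding box height from pixels to millimetres on the sensor.
    object_height_on_sensor_mm = object_height_px * sensor_height_mm / image_height_px
    # Similar triangles: distance / object_height = focal_length / height_on_sensor.
    return focal_length_mm * object_height_m / object_height_on_sensor_mm


# Hypothetical numbers: 6 mm lens, 4.8 mm tall sensor, 1200 px tall image,
# and a 1.0 m tall signal head whose bounding box is 30 px tall.
print(estimate_distance_m(6.0, 4.8, 1200, 1.0, 30.0))  # ≈ 50.0 m
```

Halving the bounding box height doubles the estimated distance, so small pixel errors on distant lights translate into large range errors, which is one reason the positions may need filtering.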

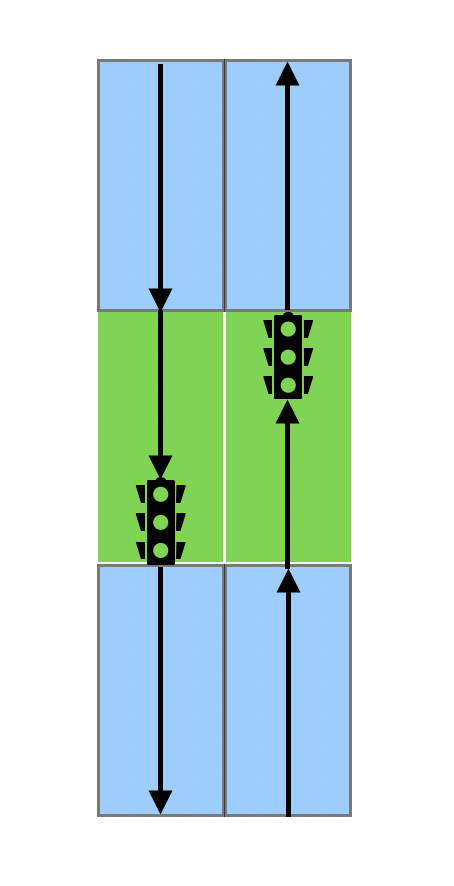

SAE provides traffic light mapping information in the form of a JSON file. See this example. The data format in this file is intuitive: a traffic light is defined by a position, some light bulbs, and, most importantly, its lane associations. An excerpt of a lane association is shown below.

Before trying to read it, it's important to understand how intersections are represented in the HERE Lane Geometry Layer. Traversing a classic traffic-light-controlled intersection requires traversing 3 lanes: the entry lane, the intersection lane, and the exit lane. Let me draw a picture for you. The green block is the intersection lane; the blue blocks are the entry and exit lanes. To spell it out, the bottom right lane is an entry lane and the top right lane is an exit lane. The same concept applies if the intersection lane is a turning lane; that case is not drawn. A particular traffic light therefore controls multiple (entry lane, intersection lane, exit lane) tuples, but in general a traffic light is associated with one or more entry lanes. That's how we drive, too: we sit in some entry lane and look at one traffic light.


Now back to the JSON file. Here is how to read it. The START_PORTAL key identifies the entry lane; its value is a concatenation of the lane_group_id and the lane index. Likewise, END_PORTAL identifies an exit lane. HDLM_LANE_GROUP and HDLM_LANE_GROUP_INDEX together identify an intersection lane. In this excerpt, one traffic light has multiple lane associations, which makes sense.

    "LANE_ASSOCIATIONS" : [
      {
        "END_PORTAL" : "PORTAL_6925022172_0",
        "HDLM_LANE_GROUP" : "6212548049",
        "HDLM_LANE_GROUP_INDEX" : 2,
        "START_PORTAL" : "PORTAL_2745156711_1"
      },
      {
        "END_PORTAL" : "PORTAL_1350341518_0",
        "HDLM_LANE_GROUP" : "290611284",
        "HDLM_LANE_GROUP_INDEX" : 2,
        "START_PORTAL" : "PORTAL_2745156711_1"
      }
    ],
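
Under this reading of the format, a minimal Python sketch that splits a portal string into its (lane_group_id, lane_index) pair and turns one LANE_ASSOCIATIONS entry into an (entry, intersection, exit) lane tuple. It assumes every portal has exactly the form PORTAL_<lane_group_id>_<lane_index>; `parse_portal`, `parse_association`, and `LaneTraversal` are hypothetical names.

```python
from typing import NamedTuple, Tuple

LaneKey = Tuple[int, int]  # (here_lane_group_id, here_lane_index)


class LaneTraversal(NamedTuple):
    """Hypothetical record of the 3 lanes used to cross an intersection."""
    entry_lane: LaneKey
    intersection_lane: LaneKey
    exit_lane: LaneKey


def parse_portal(portal: str) -> LaneKey:
    """Hypothetical helper: 'PORTAL_<lane_group_id>_<lane_index>' -> integers."""
    prefix, group_id, lane_index = portal.split("_")
    if prefix != "PORTAL":
        raise ValueError(f"unexpected portal format: {portal!r}")
    return int(group_id), int(lane_index)


def parse_association(assoc: dict) -> LaneTraversal:
    """Hypothetical helper: one LANE_ASSOCIATIONS entry -> (entry, intersection, exit)."""
    return LaneTraversal(
        entry_lane=parse_portal(assoc["START_PORTAL"]),
        intersection_lane=(int(assoc["HDLM_LANE_GROUP"]),
                           int(assoc["HDLM_LANE_GROUP_INDEX"])),
        exit_lane=parse_portal(assoc["END_PORTAL"]),
    )


example = {
    "END_PORTAL": "PORTAL_6925022172_0",
    "HDLM_LANE_GROUP": "6212548049",
    "HDLM_LANE_GROUP_INDEX": 2,
    "START_PORTAL": "PORTAL_2745156711_1",
}
print(parse_association(example))
```

Python integers are unbounded, so IDs such as 6212548049 parse safely here even though they do not fit in a 32-bit signed integer.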

Implementation and Data Flow

Parse JSON file

Parse the JSON file to create the following mappings for fast lookup:

- (here_lane_group_id, here_lane_index) : traffic_light. The key is derived from START_PORTAL; the value is a traffic light data structure.

- here_lane_group_id : set(traffic_light). The key is derived from START_PORTAL; the value is a set of traffic light data structures.
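
A minimal Python sketch of building both lookup tables. It assumes the parsed JSON is available as a list of traffic light dictionaries, each with a LANE_ASSOCIATIONS array as in the excerpt above, and it identifies each traffic light by its position in that list for brevity; `build_mappings` and `portal_to_lane` are hypothetical names, and the real parser may store richer traffic light objects.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

LaneKey = Tuple[int, int]  # (here_lane_group_id, here_lane_index)


def portal_to_lane(portal: str) -> LaneKey:
    """Hypothetical helper: 'PORTAL_2745156711_1' -> (2745156711, 1)."""
    _, group_id, lane_index = portal.split("_")
    return int(group_id), int(lane_index)


def build_mappings(
        traffic_lights: Iterable[dict]) -> Tuple[Dict[LaneKey, int], Dict[int, Set[int]]]:
    """Hypothetical builder for the two lookup tables.

    Traffic lights are identified by their index in `traffic_lights`.
      lane_to_light:   (lane_group_id, lane_index) -> traffic light index
      group_to_lights: lane_group_id -> set of traffic light indices
    """
    lane_to_light: Dict[LaneKey, int] = {}
    group_to_lights: Dict[int, Set[int]] = defaultdict(set)
    for light_idx, light in enumerate(traffic_lights):
        for assoc in light["LANE_ASSOCIATIONS"]:
            entry_lane = portal_to_lane(assoc["START_PORTAL"])
            # Assumes one traffic light per entry lane, as the first mapping
            # implies; a later light for the same lane overwrites an earlier one.
            lane_to_light[entry_lane] = light_idx
            group_to_lights[entry_lane[0]].add(light_idx)
    return lane_to_light, dict(group_to_lights)


# Usage with two hypothetical lights on the same approach.
lights = [
    {"LANE_ASSOCIATIONS": [{"START_PORTAL": "PORTAL_2745156711_1"},
                           {"START_PORTAL": "PORTAL_2745156711_1"}]},
    {"LANE_ASSOCIATIONS": [{"START_PORTAL": "PORTAL_2745156711_0"}]},
]
lane_to_light, group_to_lights = build_mappings(lights)
```

The per-group set is what lets the low-accuracy approach consider every mapped light at an approach at once.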

It's likely that only one of these mappings will be necessary; which one depends on the accuracy and precision of Perception's position estimates.

Matching

Given Perception detections, the traffic light mappings, and the query, run a matching algorithm to determine which traffic light detection applies to the vehicle. This step depends strongly on the accuracy and precision of the traffic light positions provided by Perception. There are 2 approaches, depending on the integrity of the Perception data: the accuracy of position estimates, the false positive rate, and the missed detection rate.

High Position Accuracy

Look up the mapping of (here_lane_group_id, here_lane_index) to traffic_light. Match this traffic light to the physically closest detected traffic light. Done.
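
The high-accuracy case reduces to a nearest-neighbour lookup. A minimal Python sketch, assuming the mapped and detected positions have already been expressed in the same local metric frame; `closest_detection` is a hypothetical helper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # position in a shared local frame, metres


def closest_detection(mapped_light: Point, detections: Sequence[Point]) -> int:
    """Hypothetical helper: index of the detection physically closest to the mapped light."""
    if not detections:
        raise ValueError("no detections to match against")
    return min(range(len(detections)),
               key=lambda i: math.dist(mapped_light, detections[i]))


mapped = (10.0, 0.0, 5.0)
detections = [(9.0, 3.0, 5.0), (10.5, 0.2, 5.0), (12.0, -4.0, 5.0)]
print(closest_detection(mapped, detections))
```

In practice a maximum matching distance would likely be added so that a false positive is not accepted just because it is the only detection.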

Low Position Accuracy

It's no longer sufficient to match by minimizing the distance from the mapped traffic light to a detected traffic light. Instead, look up the mapping of here_lane_group_id to set(traffic_light) and match objects between 2 collections. This resembles the well-studied stable marriage problem, with the restriction that neither collection is really a set: both are ordered from left to right (from the perspective of the vehicle). This ordering constraint eliminates many of the possible matchings, which makes the problem even easier.
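
One way to exploit the left-to-right ordering is sketched below in Python. Note that this swaps in a different technique from the stable marriage analogy: an order-preserving assignment solved with dynamic programming, much like sequence alignment. It assumes both lists are sorted left to right, that false positives have already been removed so there are no more detections than mapped lights, and that Euclidean distance in a shared local frame is a reasonable cost; `match_ordered` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in a shared local frame, metres


def match_ordered(detected: Sequence[Point], mapped: Sequence[Point]) -> List[int]:
    """Hypothetical matcher: detection i -> mapped index, preserving left-to-right order.

    Mapped lights may be skipped (missed detections). The result minimises the
    total Euclidean distance over all order-preserving assignments.
    """
    m, n = len(detected), len(mapped)
    if m > n:
        raise ValueError("more detections than mapped lights")
    # cost[i][j]: best total cost of matching the first i detections
    # using only the first j mapped lights.
    cost = [[math.inf] * (n + 1) for _ in range(m + 1)]
    for j in range(n + 1):
        cost[0][j] = 0.0
    for i in range(1, m + 1):
        for j in range(i, n + 1):
            skip = cost[i][j - 1]  # leave mapped light j-1 unmatched
            take = cost[i - 1][j - 1] + math.dist(detected[i - 1], mapped[j - 1])
            cost[i][j] = min(skip, take)
    # Backtrack to recover which mapped light each detection took.
    matches = [0] * m
    i, j = m, n
    while i > 0:
        if cost[i][j] == cost[i][j - 1]:
            j -= 1
        else:
            matches[i - 1] = j - 1
            i -= 1
            j -= 1
    return matches


mapped = [(0.0, 0.0), (3.0, 0.0), (6.0, 0.0)]
# Greedy nearest-neighbour would send both detections to mapped index 1.
print(match_ordered([(1.6, 0.0), (2.0, 0.0)], mapped))
```

Because mapped lights can be skipped, missed detections are tolerated; tolerating false positives as well would need an extra "skip detection" transition with some penalty.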

Prior to matching, it may also be necessary to filter the traffic light positions and to maintain object permanence; that is, the detections must pass through object tracking.
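
The draft does not specify a filter; as one possible choice (our assumption), a per-track exponential moving average is sketched below in Python. It assumes object tracking supplies a stable integer track ID for each traffic light; `PositionSmoother` and `alpha` are hypothetical.

```python
from typing import Dict, Tuple

Point = Tuple[float, float, float]


class PositionSmoother:
    """Hypothetical filter: exponential moving average of each tracked light's position."""

    def __init__(self, alpha: float = 0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # weight given to the newest measurement
        self._estimates: Dict[int, Point] = {}

    def update(self, track_id: int, measured: Point) -> Point:
        prev = self._estimates.get(track_id)
        if prev is None:
            estimate = measured  # first sighting: take the measurement as-is
        else:
            estimate = tuple(self.alpha * m + (1.0 - self.alpha) * p
                             for m, p in zip(measured, prev))
        self._estimates[track_id] = estimate
        return estimate


smoother = PositionSmoother(alpha=0.5)
smoother.update(7, (0.0, 0.0, 0.0))
print(smoother.update(7, (2.0, 4.0, 6.0)))
```

A smaller `alpha` smooths more but reacts more slowly; the filter only helps if the track ID survives between frames.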

Object Tracking

Object tracking (OT) is completely broken and needs to be rewritten. Skip this stage for now.

Outputs

The query returns the usual TrafficLight ROS Message; there's no need to make a new interface.

Frank's Design Draft

This design document proposes how to transmit traffic light information and the associated lanes to Path Planning. Prerequisite knowledge includes the HERE lane group structure. For clarification, the term signal direction refers to the directions that light up as green, yellow, red, etc. on a traffic light (e.g., a green left arrow signals that we can turn left). Traffic direction refers to the directions of travel that the traffic light controls (e.g., forward-right means that on green, you may go forward or turn right).

Context:

At the moment, basic traffic light information (colour, signal direction, traffic direction) is published on the topic /traffic_light_detection and is consumed by the HLDF node. The traffic lights received from Perception will be sorted from left to right (to be confirmed). HLDF will pass this information to object tracking, which will then match the traffic lights to a 3D bounding box, thereby also giving each traffic light a pose. Path Planning, however, needs to know exactly which lanes the traffic lights control in order to perform local path planning.

SAE has provided us with traffic light lane associations in a JSON file that is separate from the HERE maps. For each traffic light, the file provides the latitude, longitude, and height of the four corners of the light. For lane associations, an array of intersection HERE lane group IDs and lane indices is provided to signify which lanes the traffic signals apply to. The lane groups can be turning lane groups, so, by extension, the lane associations also tell us the traffic directions of the traffic lights. There are also START_PORTAL/END_PORTAL fields in the format "PORTAL_102257501006_4". It seems that 102257501006 does not correspond to a HERE lane group ID, so we will only use the HDLM_LANE_GROUP fields. We already have a Python class that parses the JSON file for all of the relevant explicit information.

Here is an excerpt of the fields we are concerned with:

    "BOUNDARY" : [
      {
        "HEIGHT" : 304.5553411843093,
        "LATITUDE" : 40.31895808481980,
        "LONGITUDE" : -83.55987739033648
      },
      {
        "HEIGHT" : 304.5553411843093,
        "LATITUDE" : 40.31895523324126,
        "LONGITUDE" : -83.55988314545556
      },
      {
        "HEIGHT" : 306.6053411843093,
        "LATITUDE" : 40.31895523324126,
        "LONGITUDE" : -83.55988314545556
      },
      {
        "HEIGHT" : 306.6053411843093,
        "LATITUDE" : 40.31895808481980,
        "LONGITUDE" : -83.55987739033648
      }
    ],
    ...
    "LANE_ASSOCIATIONS" : [
      {
        "END_PORTAL" : "PORTAL_102257501006_4",
        "HDLM_LANE_GROUP" : "102205001039",
        "HDLM_LANE_GROUP_INDEX" : 2,
        "START_PORTAL" : "PORTAL_102240001004_1"
      },
      {
        "END_PORTAL" : "PORTAL_102257501006_5",
        "HDLM_LANE_GROUP" : "102205001039",
        "HDLM_LANE_GROUP_INDEX" : 1,
        "START_PORTAL" : "PORTAL_102240001004_0"
      },
      {
        "END_PORTAL" : "PORTAL_102257501006_5",
        "HDLM_LANE_GROUP" : "102292501039",
        "HDLM_LANE_GROUP_INDEX" : 1,
        "START_PORTAL" : "PORTAL_102182501003_0"
      }
    ],
    ...
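
To reduce the four BOUNDARY corners to a single position that can be compared against detections, a minimal Python sketch averages the corners and converts latitude and longitude into approximate east/north metres relative to a reference point. The equirectangular approximation and the mean Earth radius constant are our assumptions: reasonable over the short distances around one intersection, but no substitute for a proper geodetic library. `boundary_centroid` and `to_local_en` are hypothetical names.

```python
import math
from typing import Dict, List, Tuple

EARTH_RADIUS_M = 6371000.0  # mean Earth radius (approximation)


def boundary_centroid(boundary: List[Dict[str, float]]) -> Tuple[float, float, float]:
    """Hypothetical helper: average the BOUNDARY corners into (latitude, longitude, height)."""
    n = len(boundary)
    lat = sum(c["LATITUDE"] for c in boundary) / n
    lon = sum(c["LONGITUDE"] for c in boundary) / n
    height = sum(c["HEIGHT"] for c in boundary) / n
    return lat, lon, height


def to_local_en(lat: float, lon: float, ref_lat: float, ref_lon: float) -> Tuple[float, float]:
    """Hypothetical helper: approximate (east, north) offset in metres from a reference point."""
    d_lat = math.radians(lat - ref_lat)
    d_lon = math.radians(lon - ref_lon)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat))
    north = EARTH_RADIUS_M * d_lat
    return east, north


boundary = [
    {"HEIGHT": 304.5553411843093, "LATITUDE": 40.31895808481980, "LONGITUDE": -83.55987739033648},
    {"HEIGHT": 304.5553411843093, "LATITUDE": 40.31895523324126, "LONGITUDE": -83.55988314545556},
    {"HEIGHT": 306.6053411843093, "LATITUDE": 40.31895523324126, "LONGITUDE": -83.55988314545556},
    {"HEIGHT": 306.6053411843093, "LATITUDE": 40.31895808481980, "LONGITUDE": -83.55987739033648},
]
lat, lon, height = boundary_centroid(boundary)
print(lat, lon, height)
```

The reference point would typically be the vehicle's own position, so that map lights and detections end up in the same local frame.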

Solution:

First, we will augment our JSON file parser to provide more useful information. The traffic lights need to be put into groups, as we are interested in all the traffic lights that correspond to a given direction of travel. We will sort the traffic lights from left to right, and after extracting the lane associations, we will determine the traffic directions each traffic light controls from the directions of its associated HERE lane groups. This information will come in handy later.
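
Sorting "left to right" only makes sense relative to a direction of travel. A minimal Python sketch, assuming each light already has a local (east, north) position and that the approach heading is available as a 2-D vector (for example, derived from the associated HERE lane geometry); `sort_left_to_right` is a hypothetical name. Lights are ordered by their signed offset along the leftward normal of the heading.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]  # (east, north) in metres


def sort_left_to_right(positions: Sequence[Vec2], heading: Vec2) -> List[int]:
    """Hypothetical helper: indices of `positions` ordered left to right for a viewer facing `heading`."""
    hx, hy = heading
    # Rotating the heading 90 degrees counter-clockwise gives the leftward direction.
    left_x, left_y = -hy, hx

    def leftward_offset(i: int) -> float:
        px, py = positions[i]
        return px * left_x + py * left_y

    # Largest leftward offset first, i.e. leftmost light first.
    return sorted(range(len(positions)), key=leftward_offset, reverse=True)


# Facing north, the light furthest west is the leftmost.
print(sort_left_to_right([(3.0, 50.0), (-2.0, 50.0), (0.5, 50.0)], (0.0, 1.0)))
```

The heading does not need to be normalised, since only the ordering of the offsets matters.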

Now, let's consider how a human normally approaches traffic lights. When we see a traffic light, we know its signals apply to the lane group we are currently on, and we can associate the signals for a given direction with 1 or more lanes in our lane group. We typically ignore, and are usually unable to see, traffic lights corresponding to a lane group that we are not on. When we see traffic lights while crossing the intersection, we ignore them because we are not supposed to stop in an intersection.

Our solution works in a similar way, and we also assume that Perception will not capture traffic lights corresponding to a lane group that we are not currently on. In hdmap_processing, we will create a new service that accepts the set of traffic lights we see and the HERE lane group ID we are currently on, and returns the group of traffic lights closest to the traffic lights we pass in. The returned traffic lights will contain their associated HERE lane group IDs, lane indices, and traffic directions, which we can obtain from the JSON file mentioned above. Whether we send in 1 or more traffic lights, we will always get back an entire group of traffic lights, because we trust our maps more than Perception.

TrafficLight[] getTrafficLightGroup(TrafficLight[], int64)

The lane group ID argument is int64 because HERE lane group IDs such as 102205001039 exceed the int32 range.

A possible implementation of getTrafficLightGroup is as follows:

Using the HERE lane group ID, find the 2nd closest group of traffic lights in front of us whose associated lane groups are all accessible from the current lane group. We take the 2nd closest because the closest should always be opposing our direction of travel and therefore, is not applicable to us. Any other traffic light groups further away would correspond to the next intersection down the road and are also not applicable to us.
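
Taking the rule above literally, the selection could be sketched in Python as follows. The data structures are assumptions: each group carries its distance ahead of the vehicle along the direction of travel and its associated lane group IDs, and `reachable` is a hypothetical set of lane group IDs accessible from the current lane group (in practice this would come from the HERE lane topology). `TrafficLightGroup` and `select_applicable_group` are hypothetical names.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Sequence, Set


@dataclass(frozen=True)
class TrafficLightGroup:
    """Hypothetical map-side group of traffic lights."""
    group_id: int
    distance_ahead_m: float          # along the direction of travel; negative = behind
    lane_group_ids: FrozenSet[int]   # associated HERE lane groups


def select_applicable_group(groups: Sequence[TrafficLightGroup],
                            reachable: Set[int]) -> Optional[TrafficLightGroup]:
    """Pick the 2nd closest group ahead whose lane groups are all reachable."""
    candidates = sorted(
        (g for g in groups
         if g.distance_ahead_m > 0 and g.lane_group_ids <= reachable),
        key=lambda g: g.distance_ahead_m,
    )
    # Per the draft, the closest candidate faces the opposing direction, so skip it.
    return candidates[1] if len(candidates) >= 2 else None


groups = [
    TrafficLightGroup(1, 20.0, frozenset({1})),
    TrafficLightGroup(2, 35.0, frozenset({1, 2})),
    TrafficLightGroup(3, 120.0, frozenset({1})),
    TrafficLightGroup(4, -10.0, frozenset({1})),   # behind the vehicle
    TrafficLightGroup(5, 25.0, frozenset({9})),    # lane group 9 not reachable
]
print(select_applicable_group(groups, {1, 2}))
```

Returning None when fewer than 2 candidates exist lets the caller fall back to treating the signal as unknown rather than guessing.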

We then perform the association between lanes and traffic signals in object tracking after we receive the group of traffic lights (I will call this group the received group). The first time we see a set of traffic lights, we consider the detected traffic lights (I will call this group the detected group) that have a unique traffic direction. For example, we may detect a group of 4 traffic lights: the left one corresponds to going left and straight, the middle 2 correspond only to straight, and the rightmost one corresponds to going right and straight. Note that the leftmost and rightmost traffic lights do not share their traffic directions with any other detected light. Starting with the traffic light for left and straight, we search the received group for a light with the same traffic directions. This is more reliable than finding the closest traffic light in the received group, because the detected positions may have an offset that skews a traffic light closer to an adjacent light with different traffic directions.

Now consider the case where we detect only one of the middle 2 traffic lights. In this case, we match it with the closest traffic light in the received group that has the same traffic directions. Once we match a detected traffic light with a received traffic light, we remove both from their respective lists and combine their information in a new traffic light object.
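
A minimal Python sketch of this first pass. Detected lights with a unique set of traffic directions are matched first, each to the closest received light with identical directions; lights with shared directions are matched afterwards by the same rule. Representing traffic directions as frozensets of strings and positions as 1-D left-to-right offsets are our assumptions, and `Light` and `match_by_direction` are hypothetical names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass
class Light:
    """Hypothetical light record: traffic directions plus a left-to-right position."""
    directions: FrozenSet[str]   # e.g. frozenset({"LEFT", "STRAIGHT"})
    lateral_m: float             # position across the road; smaller is further left


def match_by_direction(detected: List[Light], received: List[Light]) -> Dict[int, int]:
    """Map each detected-light index to a received-light index with identical directions."""
    matches: Dict[int, int] = {}
    unmatched = set(range(len(received)))
    counts = Counter(d.directions for d in detected)
    # Detections with a unique direction set go first (stable sort keeps
    # left-to-right order), so they claim their lights before ambiguous ones.
    unique_first = sorted(range(len(detected)),
                          key=lambda i: counts[detected[i].directions] > 1)
    for i in unique_first:
        det = detected[i]
        candidates = [r for r in sorted(unmatched)
                      if received[r].directions == det.directions]
        if not candidates:
            continue  # no remaining received light controls these directions
        best = min(candidates, key=lambda r: abs(received[r].lateral_m - det.lateral_m))
        matches[i] = best
        unmatched.remove(best)
    return matches


LS, S, RS = frozenset({"LEFT", "STRAIGHT"}), frozenset({"STRAIGHT"}), frozenset({"RIGHT", "STRAIGHT"})
received = [Light(LS, 0.0), Light(S, 3.0), Light(S, 6.0), Light(RS, 9.0)]
# The left/straight detection is skewed closer to received[1], but still matches received[0].
detected = [Light(LS, 1.8), Light(S, 6.2), Light(RS, 8.0)]
print(match_by_direction(detected, received))
```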

For a set of detected traffic lights that all share the same traffic directions, we take the safest signal among them (from safest to most dangerous: red → flashing → yellow → green) and overwrite the colour of all other detected lights with those traffic directions. (We assume that, in the received group, lights that share the same traffic directions have exactly the same lane associations.) Since our detected lights are sorted from left to right, we can match them with the sorted received lights that have the same traffic directions. As in the individual traffic light case, we take the leftmost light of the detected group, match it with the closest light in the corresponding received group, and repeat with the other detected lights to the right. (This is a naive approach and there are plenty of hard counterexamples, but it is a start. We could also compare the spacing between the detected lights with the spacing between the received lights and make a judgement from that.)
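
The "safest signal wins" rule can be sketched in Python as below. Treating "flashing" as FLASHING_RED follows the TL_ST_FLA constant in the TrafficAssociation message; ignoring NON (no signal) when ranking is our assumption, and `safest_signal` and `harmonise` are hypothetical names. The input to `harmonise` is assumed to contain only lights that share the same traffic directions.

```python
from typing import Dict, Iterable, Optional

# Safest first, per the ordering above; "NON" (no signal) is deliberately unranked.
SAFETY_ORDER = ["RED", "FLASHING_RED", "YELLOW", "GREEN"]


def safest_signal(colours: Iterable[str]) -> Optional[str]:
    """Hypothetical helper: the safest ranked colour, or None if none is ranked."""
    ranked = [c for c in colours if c in SAFETY_ORDER]
    if not ranked:
        return None
    return min(ranked, key=SAFETY_ORDER.index)


def harmonise(colours_by_light: Dict[str, str]) -> Dict[str, str]:
    """Hypothetical helper: overwrite every light's colour with the safest one among them."""
    safest = safest_signal(colours_by_light.values())
    if safest is None:
        return dict(colours_by_light)  # nothing usable detected; leave as-is
    return {light: safest for light in colours_by_light}


print(harmonise({"middle_left": "GREEN", "middle_right": "YELLOW"}))
```

Choosing the safest colour means a single misclassified red light makes the vehicle stop rather than proceed, which is the conservative failure mode.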

Consider a case where we receive 3 traffic lights that all control the forward direction, but we detect only 2 of them. It's possible that our leftmost detected traffic light is closest to the rightmost received light, which leaves no logical received light for the 2nd detected light to match with. We then shift the received lights "to the right" and repeat the matching process until all of our detected lights are matched. (There are more cases to consider.)
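
One reading of the "shift to the right" step, sketched in Python: keep the detected lights in order and adjacent, try every offset of them against the received lights, and keep the offset with the smallest total distance. This contiguous-offset interpretation and the 1-D lateral positions are assumptions; `best_shift` is a hypothetical name.

```python
from typing import List, Sequence


def best_shift(detected: Sequence[float], received: Sequence[float]) -> List[int]:
    """Hypothetical matcher: detected[i] -> received[i + k] for the least-cost offset k.

    Both sequences hold lateral positions sorted left to right, and
    len(detected) <= len(received).
    """
    m, n = len(detected), len(received)
    if m == 0 or m > n:
        raise ValueError("need 1 <= len(detected) <= len(received)")

    def total_cost(k: int) -> float:
        return sum(abs(detected[i] - received[i + k]) for i in range(m))

    # Try every offset that keeps all detections within the received list.
    k_best = min(range(n - m + 1), key=total_cost)
    return [i + k_best for i in range(m)]


# 3 forward lights received, 2 detected; the leftmost detection sits near the
# rightmost received light, so offset 0 would be a poor fit.
print(best_shift([5.2, 7.5], [0.0, 3.0, 6.0]))
```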

Once we have matched a traffic light, we remove the pair from their lists and combine the information. We save the lane association data in member variables of the Traffic Light Tracked Object class so that we can use it in intersections. Once the matching has been done, we never modify that association again. We will note any traffic directions in the received group that were addressed; these traffic directions default to red.

Now that we have associated all the traffic signals with HERE lane groups, we can generate a traffic message in the following format. We will publish a TrafficAssociationList as soon as we receive traffic lights.

    # TrafficAssociation
    Header header
    # HERE Lane Group ID (int64, since IDs such as 102205001039 exceed the int32 range)
    int64 lane_group_id
    uint32 lane_index
    string TL_ST_NON=NON
    string TL_ST_RED=RED
    string TL_ST_YEL=YELLOW
    string TL_ST_GRE=GREEN
    string TL_ST_FLA=FLASHING_RED
    string colour

    # TrafficAssociationList
    Header header
    TrafficAssociation[] traffic
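
To show how matched results could fill the message, a minimal Python sketch using plain dataclasses that mirror the TrafficAssociation and TrafficAssociationList fields (the Header is omitted and no ROS library is used). Lanes whose colour is unknown are filled with RED as a conservative default. The input shape, a dict from (lane_group_id, lane_index) to an optional colour, and `build_association_list` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

TL_ST_RED = "RED"  # mirrors the message constant


@dataclass
class TrafficAssociation:
    """Plain mirror of TrafficAssociation (Header omitted)."""
    lane_group_id: int
    lane_index: int
    colour: str


@dataclass
class TrafficAssociationList:
    """Plain mirror of TrafficAssociationList (Header omitted)."""
    traffic: List[TrafficAssociation] = field(default_factory=list)


def build_association_list(
        lane_colours: Dict[Tuple[int, int], Optional[str]]) -> TrafficAssociationList:
    """Hypothetical builder: one entry per associated lane, sorted by lane key."""
    msg = TrafficAssociationList()
    for (group_id, lane_index), colour in sorted(lane_colours.items()):
        # Unknown (None or empty) colours fall back to RED, the conservative default.
        msg.traffic.append(TrafficAssociation(group_id, lane_index, colour or TL_ST_RED))
    return msg


msg = build_association_list({(102205001039, 2): "GREEN", (102205001039, 1): None})
print(msg)
```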

When we see traffic lights that we already know of again (i.e., the detected traffic light matches a previous bounding box), we no longer need to query the service for the lane association; instead, we look up what we saved before and go straight to generating the traffic message. If we see a mix of traffic lights we don't know of and ones we do, we can recalculate the association. (So we may need the option of updating a traffic light's association after all.)
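
The caching behaviour described above could look like the following Python sketch. It assumes object tracking provides a stable integer track ID per traffic light; `AssociationCache` and the `query_service` callback, which stands in for the getTrafficLightGroup call, are hypothetical, as is `fake_query` in the usage.

```python
from typing import Callable, Dict, Iterable, List, Tuple

LaneKey = Tuple[int, int]  # (lane_group_id, lane_index)


class AssociationCache:
    """Hypothetical cache of lane associations per tracked traffic light."""

    def __init__(self, query_service: Callable[[List[int]], Dict[int, List[LaneKey]]]):
        self._query = query_service
        self._assoc: Dict[int, List[LaneKey]] = {}

    def associations(self, track_ids: Iterable[int]) -> Dict[int, List[LaneKey]]:
        ids = list(track_ids)
        if any(t not in self._assoc for t in ids):
            # Mix of known and unknown lights: recompute for every visible light,
            # which allows previously saved associations to be updated.
            self._assoc.update(self._query(ids))
        return {t: self._assoc[t] for t in ids if t in self._assoc}


# Usage with a hypothetical stand-in for the service call.
calls: List[List[int]] = []


def fake_query(ids: List[int]) -> Dict[int, List[LaneKey]]:
    calls.append(list(ids))
    return {t: [(102205001039, t)] for t in ids}


cache = AssociationCache(fake_query)
cache.associations([1, 2])  # unknown lights: queries the service
cache.associations([1, 2])  # all known: answered from the cache
cache.associations([2, 3])  # track 3 is new, so the service is queried again
```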

The last part requires object tracking to work really well. It might get flaky if we keep losing track of traffic lights.


Attachments:

- Screen Shot 2020-03-17 at 1.34.16 PM.png (image/png)


Document generated by Confluence on Dec 10, 2021 04:02