
Software Division : Ego Localization Semantic Map

Created by Tony Jung, last modified on May 07, 2021

We currently have a running prototype of the semantic-map-based localization scheme.

The following steps run it on the CARLA simulator.

Note: The prototype is not yet merged into develop; it is on the /map_localization_algo_improvement branch.

1) Start the necessary containers

We need the following container profiles: path_planning, carla, tools

Make sure all of them are set as active profiles in dev_config.local.sh, then execute watod up.

Note: It's important that you do not run the perception image at the same time. It uses the same topic names, so the results will be unreliable.

2) Run the env_model for ego localization

Go into the pp_env_model container and run the ego_localization_map_service node by executing:

rosrun pp_env_model ego_localization_map_service

3) Run the localization nodes

Go into the pp_ego_localization container and run the necessary nodes for the semantic map scheme. Execute:

roslaunch pp_ego_localization ego_localization_carla.launch

This will start the semantic map localization scheme as well as the visualization tool.

4) Visualize in RViz

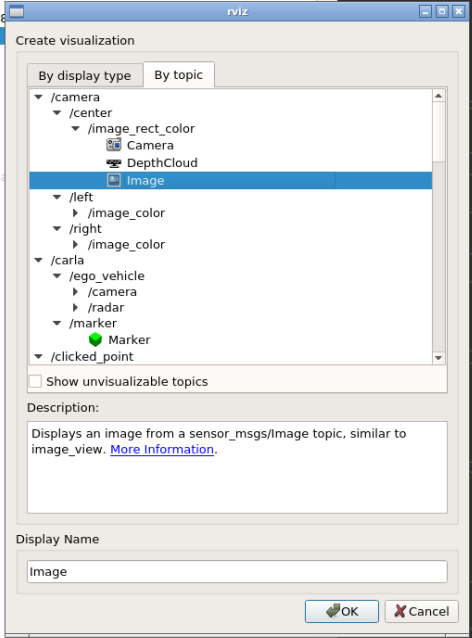

Now that everything is running, we can check the output in RViz.

Open the gui_tools container in the VNC viewer and start RViz in it.

In RViz, add the following two topics, each as an image: /camera/center/image_rect_color and /ego_localization_rviz


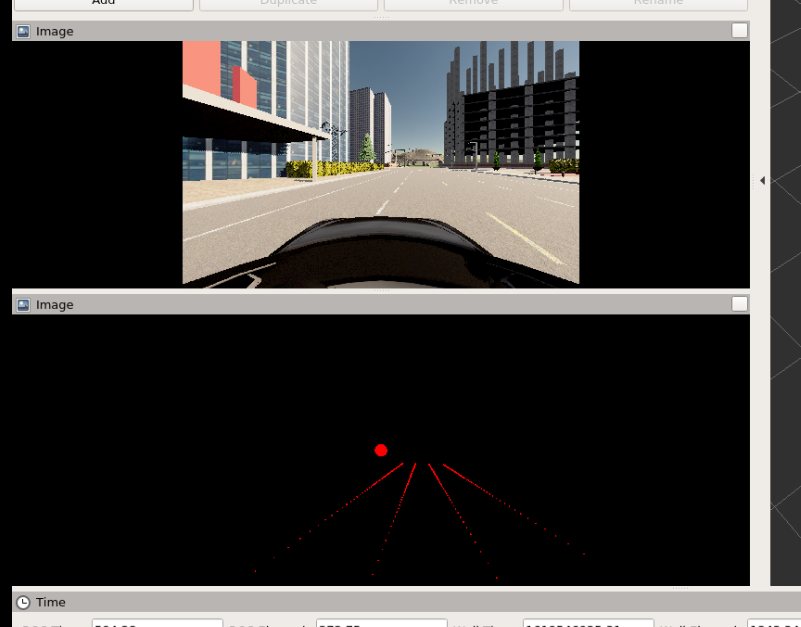

RViz will then show two images.

The top image is the center camera from CARLA and the bottom image is the ego localization visualizer. The visualizer shows the 3D map lines projected onto the 2D camera frame of the center camera.

When the lines are red, perception is not detecting any lines. The localization algorithm cannot run without perception detections.
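To make the projection step concrete, here is a minimal sketch of projecting 3D map points onto a 2D image with a pinhole camera model. It is not taken from the pp_ego_localization code: the function names and the intrinsics (fx, fy, cx, cy) are hypothetical, and it assumes the map points have already been transformed into the camera frame (x right, y down, z forward).

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project_point(point_cam: Point3D, fx: float, fy: float,
                  cx: float, cy: float) -> Optional[Pixel]:
    """Project one camera-frame point to pixel coordinates (pinhole model).

    fx, fy, cx, cy are assumed camera intrinsics in pixels (hypothetical values
    in the usage below, not the actual CARLA camera calibration).
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None  # point is behind the camera, so it is not visible
    return (fx * x / z + cx, fy * y / z + cy)


def project_polyline(points_cam: Sequence[Point3D], fx: float, fy: float,
                     cx: float, cy: float) -> List[Pixel]:
    """Project a map line (a list of 3D points), skipping points behind the camera."""
    pixels = []
    for point in points_cam:
        pixel = project_point(point, fx, fy, cx, cy)
        if pixel is not None:
            pixels.append(pixel)
    return pixels


# Example with made-up intrinsics: one visible point and one behind the camera.
line = [(1.0, 0.5, 2.0), (0.0, 0.0, -1.0)]
projected = project_polyline(line, fx=100.0, fy=100.0, cx=320.0, cy=240.0)
```

A real pipeline would also need the pose of the camera relative to the map and a check that the projected pixel falls inside the image bounds; both are left out here.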

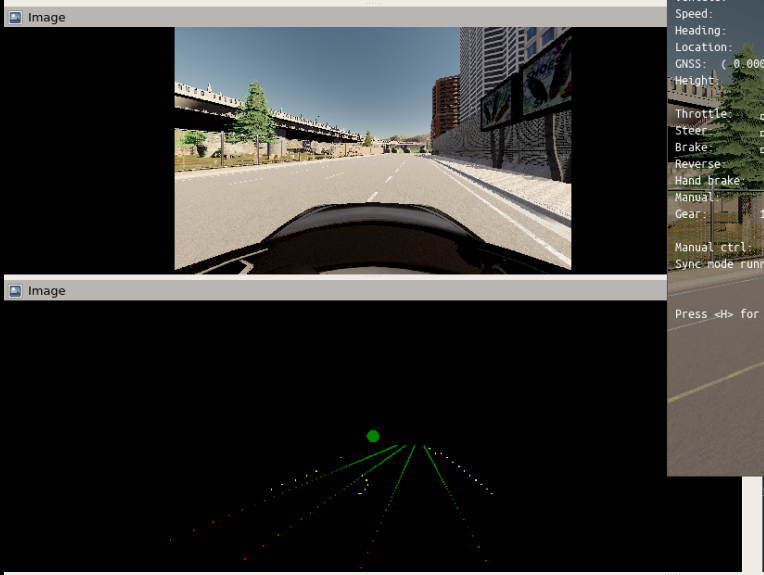

When perception (here, our CARLA test data node, which acts as the perception output) detects some lines, the lines turn green.

The yellow dots are the detections from perception.

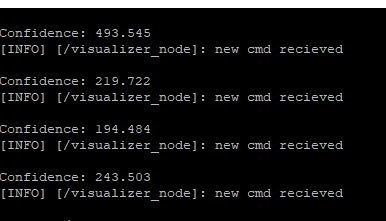

In the terminal where the localization scheme was launched, you will see the calculated confidence value. The confidence value is the distance, in pixels, between the perception detections and the map projections. The lower the value, the more accurate the localization.
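As an illustration of a pixel-distance score of this kind, the sketch below averages, over all detections, the distance to the nearest projected map point. This is an assumption about how such a value could be computed, not the formula used by the prototype; the function name and the nearest-neighbour averaging are hypothetical.

```python
import math
from typing import Sequence, Tuple

Pixel = Tuple[float, float]


def mean_nearest_distance(detections: Sequence[Pixel],
                          projections: Sequence[Pixel]) -> float:
    """Mean distance in pixels from each detection to its nearest projected map point.

    Lower values mean the projected map lines sit closer to the detections.
    """
    if not detections or not projections:
        raise ValueError("need at least one detection and one projected point")
    total = 0.0
    for det_x, det_y in detections:
        # Brute-force nearest neighbour; fine for a handful of points.
        total += min(math.hypot(det_x - px, det_y - py) for px, py in projections)
    return total / len(detections)


# Two detections that are 3 px and 4 px away from their nearest map points.
score = mean_nearest_distance([(0.0, 0.0), (10.0, 0.0)], [(0.0, 3.0), (10.0, 4.0)])
```

With no detections the function raises instead of returning a score, which mirrors the point above that the algorithm cannot run without perception detections.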


Running with actual perception detections

Currently, the steps above run the localization algorithm using test data from CARLA as the perception road line detections, so we can ignore potential errors from the perception side.

If you want to use the actual perception detections, you have to change two things:

1) Make sure you are running the perception docker profile.

2) Instead of launching ego_localization_carla.launch, launch ego_localization.launch
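If you switch between the two modes often, the choice of launch file can be captured in a small helper. This is only a convenience sketch: the function name is hypothetical, and it only builds the roslaunch command described on this page without running it.

```python
from typing import List


def localization_launch_command(use_real_perception: bool) -> List[str]:
    """Return the roslaunch command for the chosen source of detections.

    ego_localization_carla.launch uses the CARLA test data node as perception;
    ego_localization.launch expects the perception docker profile to be running.
    """
    if use_real_perception:
        launch_file = "ego_localization.launch"
    else:
        launch_file = "ego_localization_carla.launch"
    return ["roslaunch", "pp_ego_localization", launch_file]


command = localization_launch_command(use_real_perception=True)
```

Inside the pp_ego_localization container, the returned list could be passed to subprocess.run(command, check=True).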


Document generated by Confluence on Dec 10, 2021 04:01